A Pilot Study of Half-Point Increments in Scoring

A Pilot Study of Half-Point Increments in Scoring: Does expanding the scale allow reviewers to better distinguish among high impact proposals?

The current NIH grant scoring system uses an integer scale of 1 to 9, with 1 indicating the highest impact and 9 the lowest. Each application is assigned to three reviewers, who assign preliminary overall impact scores from 1 to 9 and provide written critiques. Approximately the top 50% of applications, based on preliminary overall impact score, are discussed at the review meeting, where each panel member assigns a final overall impact score. The final priority score for each application is the average of the scores assigned by the panel members, multiplied by 10, which yields a score from 10 to 90. In most cases, percentiles are then assigned to R01 applications based on the scores of applications reviewed in that study section during that review cycle and the two previous review cycles.
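The averaging step described above can be illustrated with a minimal Python sketch. The function name `priority_score` and the example panel are hypothetical, and the sketch leaves the result unrounded because the text does not state how fractional values are rounded.

```python
def priority_score(panel_scores):
    """Average the panel members' final scores (1-9) and multiply by 10.

    Hypothetical helper for illustration; rounding to a whole number is
    deliberately omitted because the source text does not describe it.
    """
    if not panel_scores:
        raise ValueError("at least one panel score is required")
    if any(s not in range(1, 10) for s in panel_scores):
        raise ValueError("official scores must be integers from 1 to 9")
    return 10 * sum(panel_scores) / len(panel_scores)


# A made-up panel of four members who split between scores of 2 and 3.
example = priority_score([2, 3, 2, 3])  # 25.0
```

A panel that unanimously assigns 1 gives the best possible score of 10, and a panel that unanimously assigns 9 gives the worst possible score of 90.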

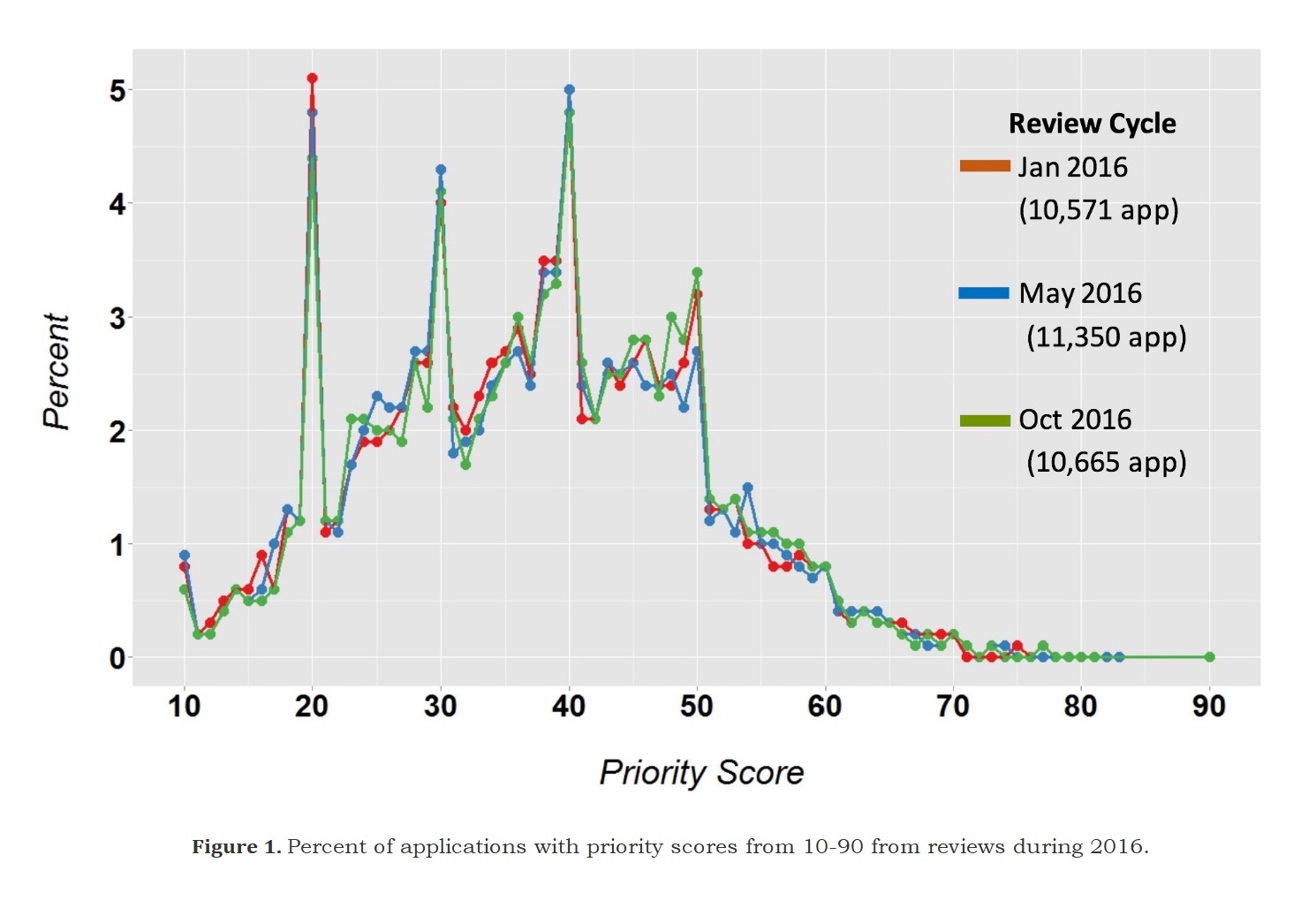

In practice, some reviewers use only a portion of the scoring range in their initial scoring and during meeting discussions. The result is score compression, in which a large number of applications receive scores in the 20-40 range, often with a high number of ties at 20 and 30 (Figure 1).

This problem may be exacerbated by low funding levels. Although reviewers are instructed to assess the merit of an application without concern about pay lines at institutes, score compression might result from reviewer concerns about the potential for an application to be funded. In study sections with severe compression, competitive applications are often scored with only two score choices (1 and 2). Score compression and ties indicate that the review panel did not distinguish among the applications for impact. This lack of clear distinction makes funding decisions more difficult, particularly when several applications receive identical scores and/or percentile ranks within the same study section.

CSR designed a pilot study to test whether allowing reviewers to score applications in half-point increments would allow reviewers to more precisely reflect their assessment of the potential scientific impact of applications, and thereby reduce score compression.

Pilot Design

The pilot study was carried out in parallel with the current scoring system in the May 2016 and October 2016 Advisory Council review cycles. Thirty-three study sections in the May 2016 review cycle and 11 study sections in the October 2016 review cycle participated.

Following the discussion of applications, the assigned reviewers set the range with integer scores following standard guidance. All reviewers entered integer scores for the official scoring of applications. Reviewers were provided with separate score sheets that included a column for unofficial half-point scores ranging from 0.5 points below their official score to 0.5 points above their official score. For example, if a reviewer had scored an application with a 2 officially, the reviewer could enter 1.5, 2.0 or 2.5 in the half-point column. A score of 1 remained the best possible score; a score of 0.5 was not allowed.
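As a rough illustration of the rule above, the following Python sketch lists the unofficial half-point values available for a given official score. The function name `half_point_options` is hypothetical. The text states only that 0.5 was not allowed; it does not say whether 9.5 was permitted, so the sketch leaves the upper end uncapped as an assumption.

```python
def half_point_options(official_score):
    """Return the half-point values a reviewer could enter for one application.

    Hypothetical helper: options run from 0.5 below to 0.5 above the official
    integer score, and 0.5 is excluded because 1 stays the best possible score.
    Assumption: no upper cap is applied, since the source does not mention one.
    """
    if official_score not in range(1, 10):
        raise ValueError("official scores must be integers from 1 to 9")
    candidates = [official_score - 0.5, float(official_score), official_score + 0.5]
    return [c for c in candidates if c >= 1.0]


options_for_2 = half_point_options(2)  # [1.5, 2.0, 2.5]
options_for_1 = half_point_options(1)  # [1.0, 1.5]
```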

This second set of scores was then used to calculate alternate scores for each application for comparison with the official scores. All analyses were produced for internal purposes only, and alternate scores were not communicated to investigators or to program officials.

Results

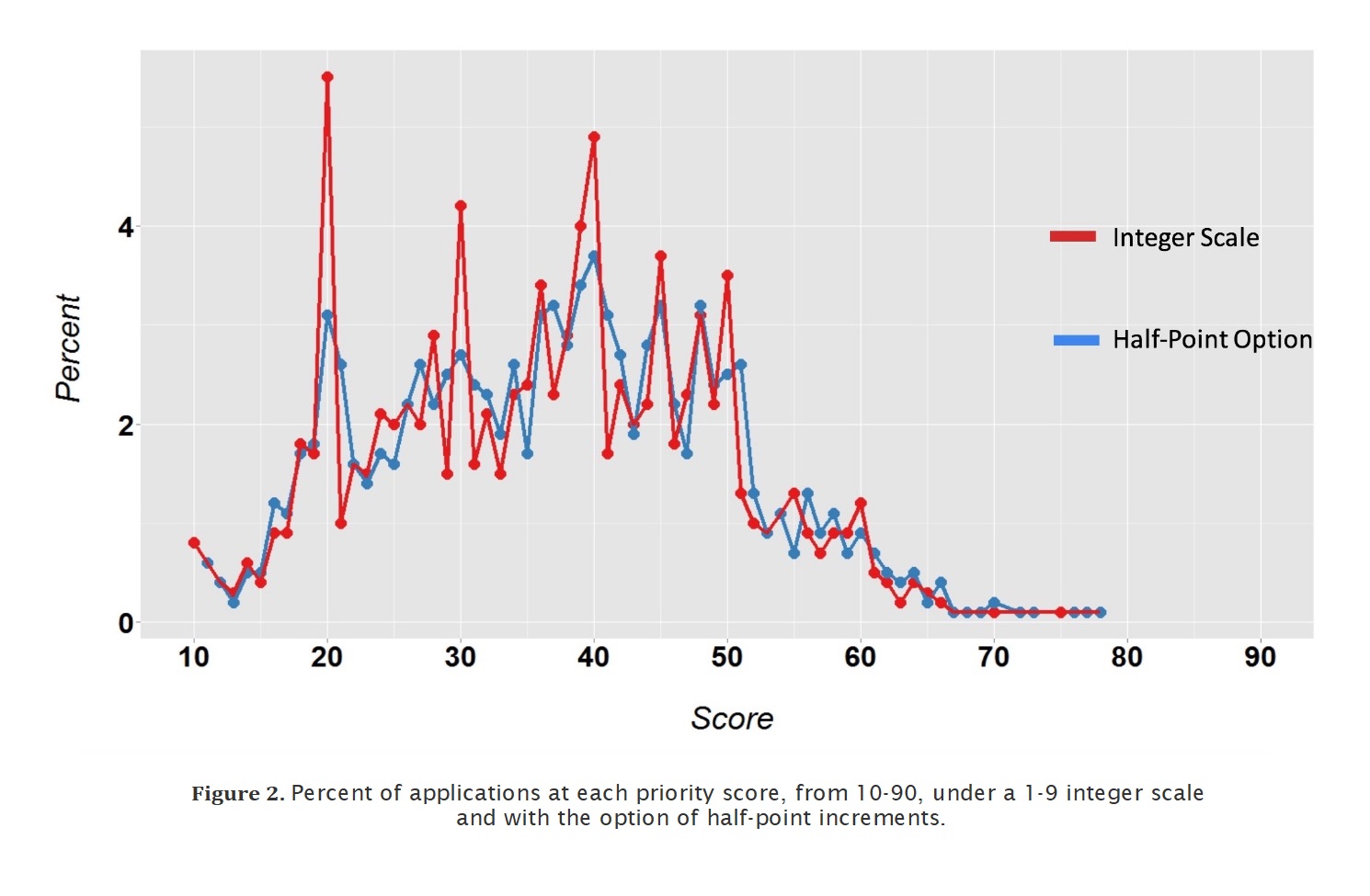

Scores calculated from half-point data were compared to the official scores for 1,371 discussed applications from 39 study sections. The percentages of scores at 20, 30 and 40 were reduced when reviewers were given the half-point option. Score compression also improved for a few study sections that were highly compressed based on the official scores. However, the effect was minimal, and new peaks formed near priority scores of 25 and 35 (Figure 2).

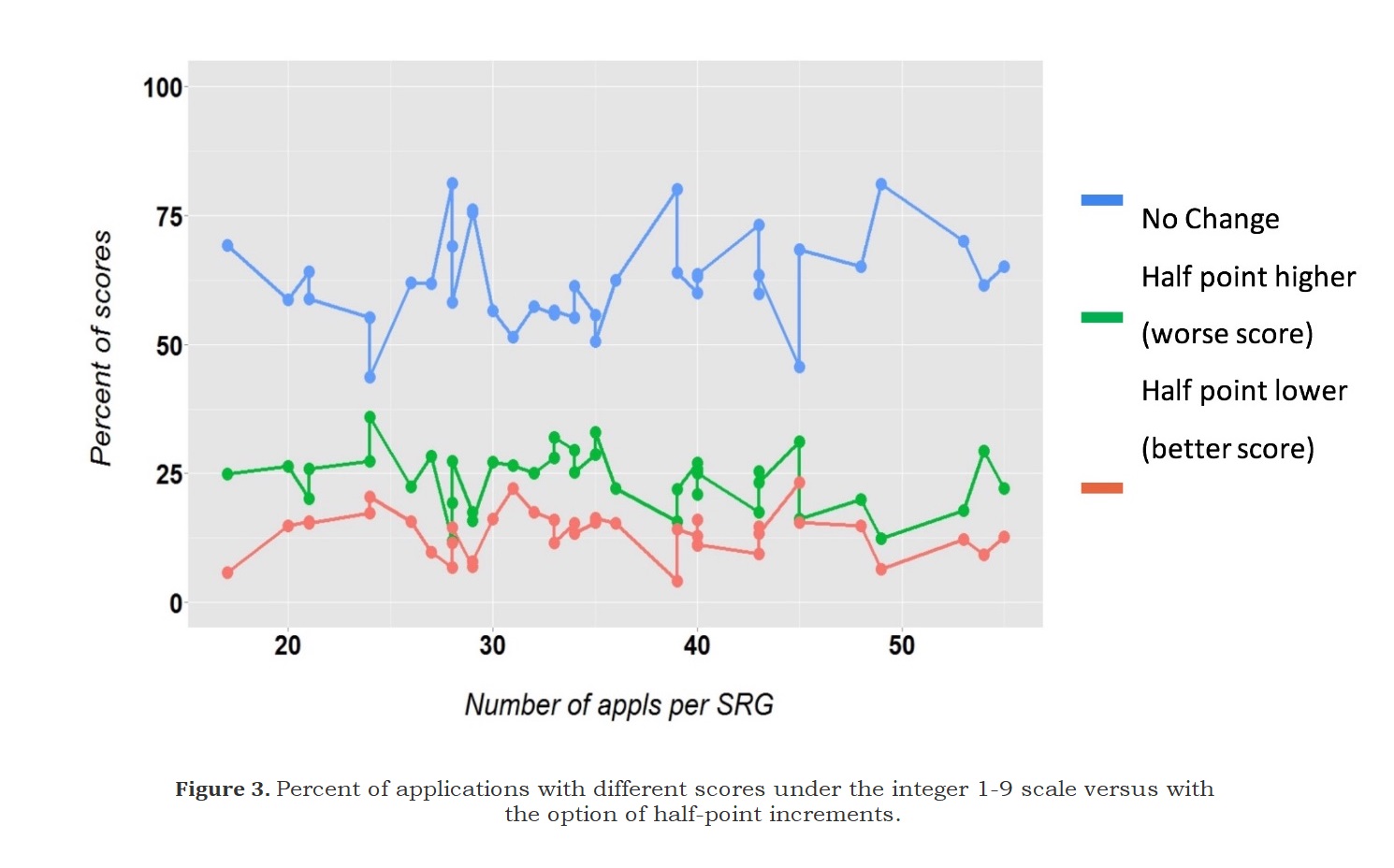

For each of the 39 study sections, we calculated the percentage of applications for which the score based on half-point options differed from the official score. The percentage of applications with a different score ranged from 40% to 80%, depending on the study section. For applications whose scores changed, reviewers were more likely to assign a worse score than a better one (Figure 3).
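The per-study-section comparison described above could be tallied along the following lines. This is a sketch only: the function name `summarize_changes` and the sample scores are made up for illustration and are not pilot data. Because 1 is the best score, a higher alternate score counts as worse.

```python
def summarize_changes(official, alternate):
    """Compare official and half-point-based scores for one study section.

    Hypothetical helper: both arguments are equal-length lists of priority
    scores, one entry per application. Higher numbers mean lower impact.
    """
    if len(official) != len(alternate):
        raise ValueError("score lists must have the same length")
    if not official:
        raise ValueError("at least one application is required")
    worse = sum(1 for o, a in zip(official, alternate) if a > o)
    better = sum(1 for o, a in zip(official, alternate) if a < o)
    return {
        "pct_changed": 100 * (worse + better) / len(official),
        "worse": worse,
        "better": better,
    }


# Illustrative scores for five applications (not taken from the pilot).
summary = summarize_changes([20, 30, 30, 40, 20], [22, 30, 33, 38, 20])
# {'pct_changed': 60.0, 'worse': 2, 'better': 1}
```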

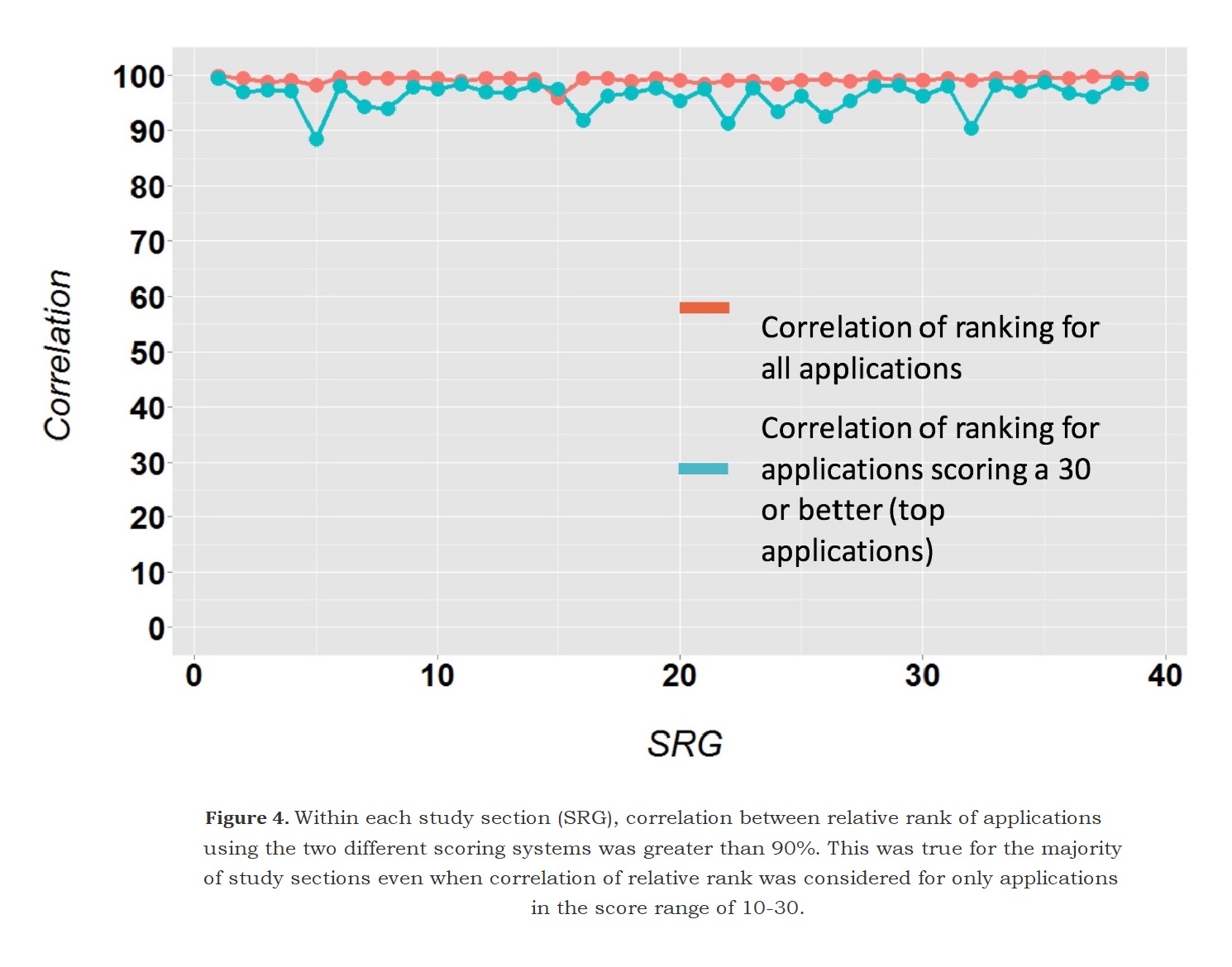

We also investigated whether the half-point scoring option affected the relative ranking of proposals. The correlation between the rank order of applications under the half-point scale and under the integer scale was 90% or greater for all study sections (Figure 4).
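The text does not name the correlation statistic used. One common choice for comparing rank orders is Spearman's rank correlation, sketched below in plain Python under that assumption. The helper names `average_ranks` and `spearman` are hypothetical, and tied scores share the average of the ranks they span.

```python
from statistics import mean


def average_ranks(values):
    """Rank values from lowest to highest, giving ties their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to cover every value tied with the one at position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1  # mean of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks


def spearman(x, y):
    """Spearman rank correlation: Pearson correlation of the average ranks."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length lists with at least 2 items")
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = mean(rx), mean(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    var_x = sum((a - mx) ** 2 for a in rx)
    var_y = sum((b - my) ** 2 for b in ry)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when all scores are tied")
    return cov / (var_x * var_y) ** 0.5
```

Calling `spearman(official_scores, half_point_scores)` for the applications in one study section would return a value between -1 and 1, where values near 1 mean the two scales rank the applications in nearly the same order.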

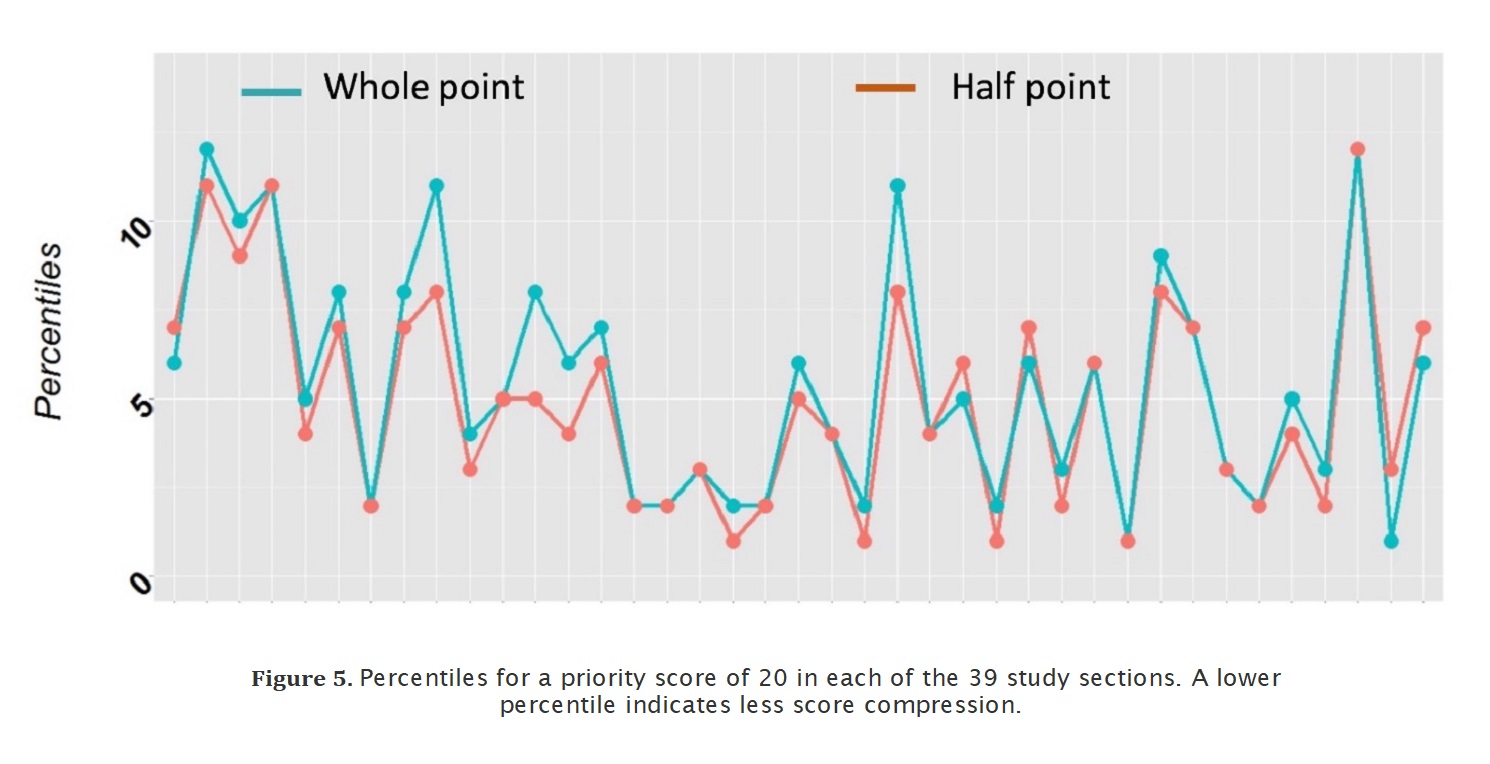

Although the initial analyses indicated a minimal effect of the half-point option on score compression, we analyzed the data further for compressed scores in the high impact range. We examined percentiles for priority scores of 20 under the integer-scoring system and when half-point increments were an option (Figure 5). Score compression was reduced in 49% of the study sections, increased in 13%, and unchanged in 38% of study sections.

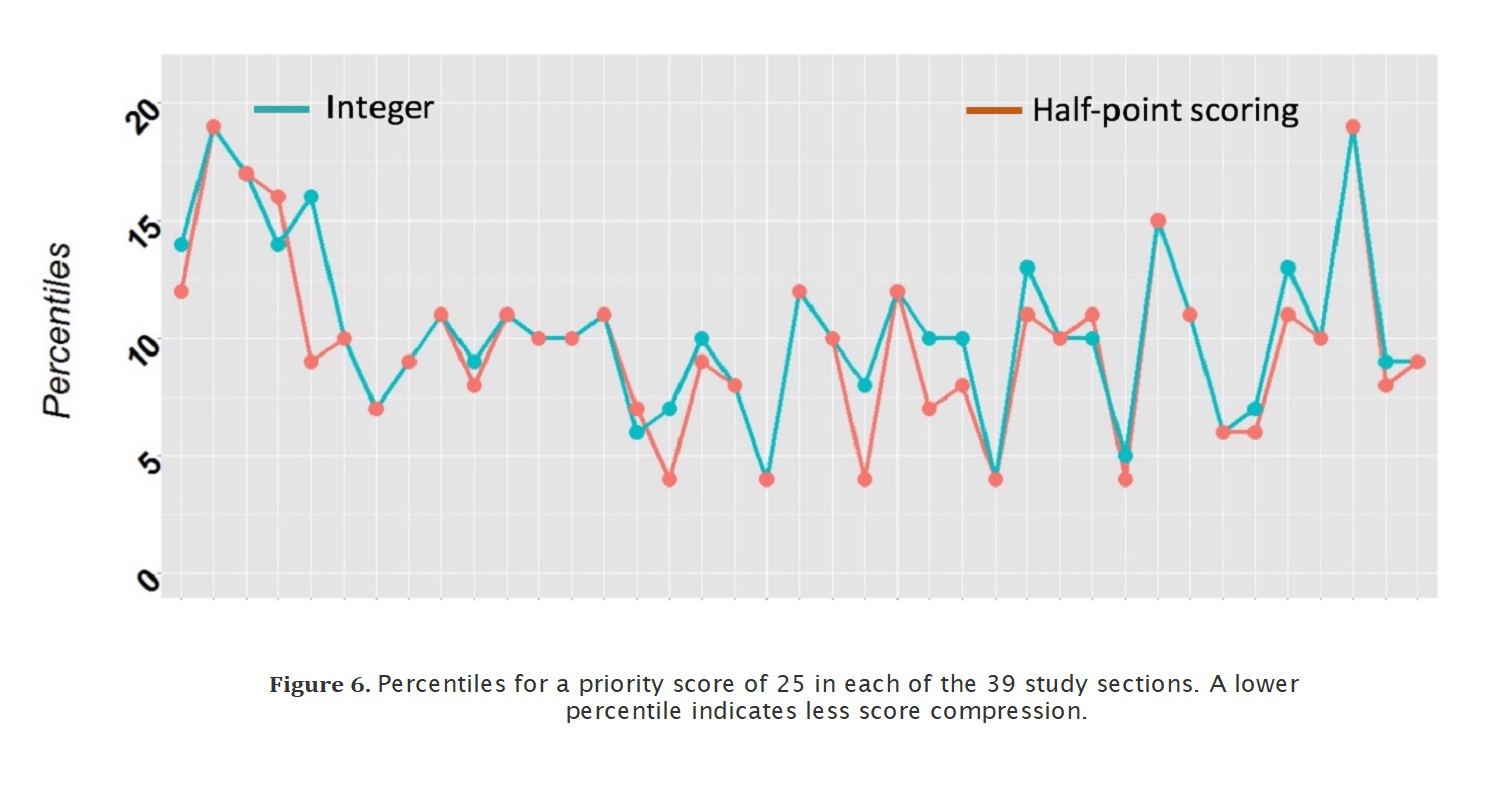

In examining applications with a priority score of 25 (Figure 6), compression was reduced in 33% of the study sections, increased in 8%, and unchanged in 59% of study sections.

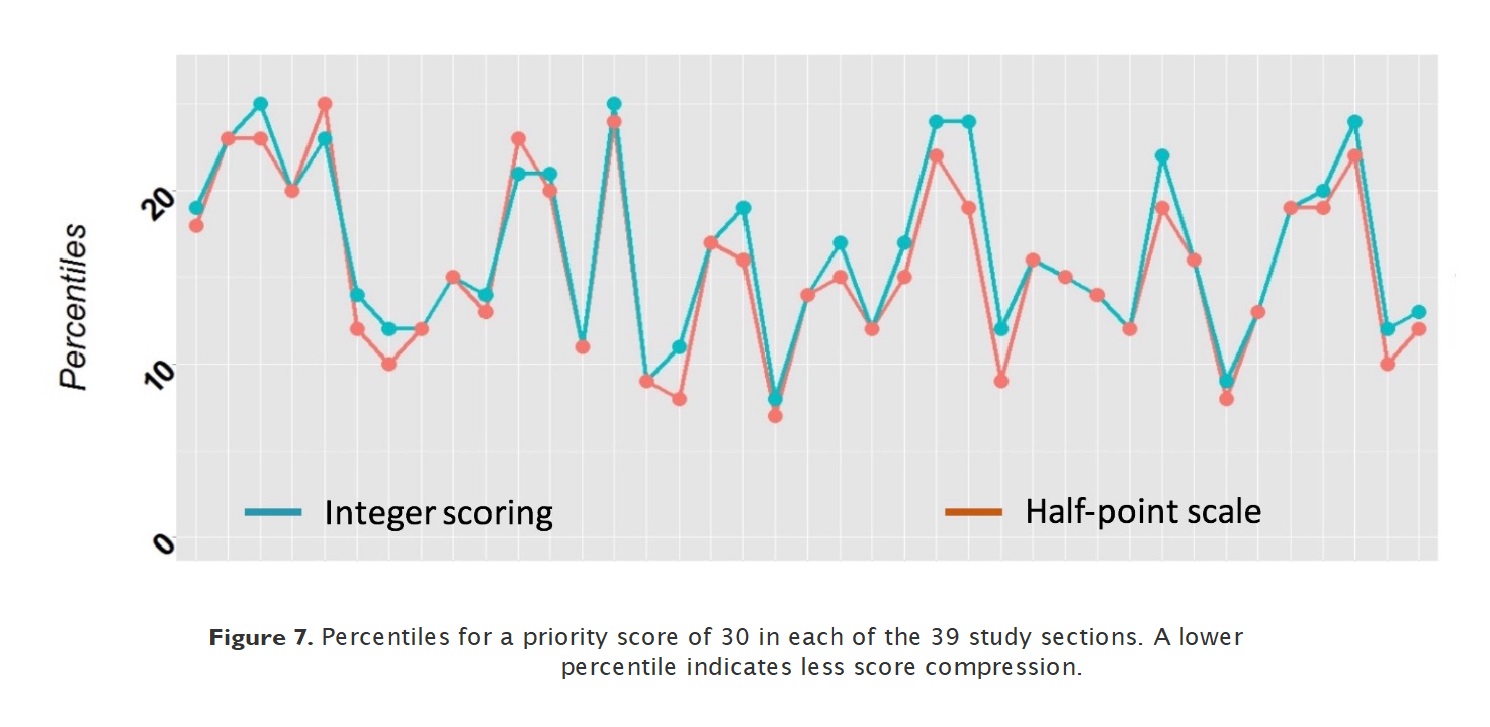

For applications with a priority score of 30 (Figure 7), compression was reduced in 54% of study sections, increased in 5% of study sections, and unchanged in 41% of study sections.

Conclusions

Providing more scoring options by allowing half-point increments had a minimal effect on score compression. When examined across the full range of official scores from 1 to 9, the percentage of applications tied at priority scores of 20, 30, and 40 was slightly reduced, but new ties appeared near 25 and 35. Across the score range, the correlation between the official score and the score derived with a half-point option was greater than 90%, indicating limited potential for a half-point scale to better differentiate between applications. Even when data were examined for applications with official priority scores of 20, of 30, or of 40 (the range of scores where ties are most common), differences were minimal. All the data indicate that a score range with half-point increments does not allow reviewers to better distinguish among the high impact applications and is, therefore, of limited utility.

Acknowledgments

- Dr. Mary Ann Guadagno, SRO, Office of Planning, Analysis and Evaluation

- Dr. Adrian Vancea, Program Analyst, Office of Planning, Analysis and Evaluation

- Dr. Ghenima Dirami, Scientific Review Officer (SRO)

- Dr. Gary Hunnicutt, SRO

- Dr. Raya Mandler, SRO

- Dr. Suzanne Ryan, SRO

- Dr. Atul Sahai, SRO

- Dr. Jeff Smiley, SRO

- Dr. Wei-Qin Zhao, SRO

- Sara Ahlgren, CDD

- Nuria Assa-Munt, BBM

- David Balasundaram, NCSD

- Aruna Behera, SBDD

- Catherine Bennett, SCS

- John Bleasdale, MCE

- John Burch, CMAD

- Ghenima Dirami, LIRR

- Bahiru Gametchu, HAI

- Ruth Grossman, BMIT-A

- Gary Hunnicutt, CMIR

- Alexi Kondratyev, CDIN

- Svetlana Kotliarova, CDP

- Chee Lim, ESTA

- Lee Mann, BMIO

- Dan McDonald, SBSR

- Eduardo Montalvo, AOIC

- Suzan Nadi, CNNT

- Angela Ng, TME

- Maria Nurminskaya, MRS

- Larry Pinkus, VCMB

- Bree Rahman Sesay, BMCT

- Anna Riley, PDRP

- Amy Rubinstein, GDD

- Suzanne Ryan, SSPA

- Soheyla Saadi, CRFS

- Atul Sahai, PBKD

- Geoff Schofield, BPNS

- Marci Scidmore, BACP

- Mike Selmanoff, NNRS

- Bukhtiar Shah, HT

- Denise Shaw, CII

- Hilary Sigmon, ACE

- Elena Smirnova, CSRS

- Biao Tian, MFSR

- George Vogler, BGES

- Manzoor Zarger, CAMP

- Wei-Qin Zhao, LAM

- Aiping Zhao, GMPB